About microbatch incremental models beta

The new microbatch strategy is available in beta for dbt Cloud Versionless and dbt Core v1.9.

If you use a custom microbatch macro, set a distinct behavior flag in your dbt_project.yml to enable batched execution. If you don't have a custom microbatch macro, you don't need to set this flag as dbt will handle microbatching automatically for any model using the microbatch strategy.

Read and participate in the discussion: dbt-core#10672

Refer to Supported incremental strategies by adapter for a list of supported adapters.

What is "microbatch" in dbt?

Incremental models in dbt are a materialization designed to efficiently update your data warehouse tables by only transforming and loading new or changed data since the last run. Instead of reprocessing an entire dataset every time, incremental models process a smaller number of rows, and then append, update, or replace those rows in the existing table. This can significantly reduce the time and resources required for your data transformations.

Microbatch is an incremental strategy designed for large time-series datasets:

- It relies solely on a time column (

event_time) to define time-based ranges for filtering. Set theevent_timecolumn for your microbatch model and its direct parents (upstream models). Note, this is different topartition_by, which groups rows into partitions. - It complements, rather than replaces, existing incremental strategies by focusing on efficiency and simplicity in batch processing.

- Unlike traditional incremental strategies, microbatch doesn't require implementing complex conditional logic for backfilling.

- Note, microbatch might not be the best strategy for all use cases. Consider other strategies for use cases such as not having a reliable

event_timecolumn or if you want more control over the incremental logic. Read more in Howmicrobatchcompares to other incremental strategies.

How microbatch works

When dbt runs a microbatch model — whether for the first time, during incremental runs, or in specified backfills — it will split the processing into multiple queries (or "batches"), based on the event_time and batch_size you configure.

Each "batch" corresponds to a single bounded time period (by default, a single day of data). Where other incremental strategies operate only on "old" and "new" data, microbatch models treat every batch as an atomic unit that can be built or replaced on its own. Each batch is independent and idempotent.

This is a powerful abstraction that makes it possible for dbt to run batches separately, concurrently, and retry them independently.

Example

A sessions model aggregates and enriches data that comes from two other models:

page_viewsis a large, time-series table. It contains many rows, new records almost always arrive after existing ones, and existing records rarely update. It uses thepage_view_startcolumn as itsevent_time.customersis a relatively small dimensional table. Customer attributes update often, and not in a time-based manner — that is, older customers are just as likely to change column values as newer customers. The customers model doesn't configure anevent_timecolumn.

As a result:

- Each batch of

sessionswill filterpage_viewsto the equivalent time-bounded batch. - The

customerstable isn't filtered, resulting in a full scan for every batch.

In addition to configuring event_time for the target table, you should also specify it for any upstream models that you want to filter, even if they have different time columns.

models:

- name: page_views

config:

event_time: page_view_start

We run the sessions model for October 1, 2024, and then again for October 2. It produces the following queries:

- Model definition

- Compiled (Oct 1, 2024)

- Compiled (Oct 2, 2024)

The event_time for the sessions model is set to session_start, which marks the beginning of a user’s session on the website. This setting allows dbt to combine multiple page views (each tracked by their own page_view_start timestamps) into a single session. This way, session_start differentiates the timing of individual page views from the broader timeframe of the entire user session.

{{ config(

materialized='incremental',

incremental_strategy='microbatch',

event_time='session_start',

begin='2020-01-01',

batch_size='day'

) }}

with page_views as (

-- this ref will be auto-filtered

select * from {{ ref('page_views') }}

),

customers as (

-- this ref won't

select * from {{ ref('customers') }}

),

select

page_views.id as session_id,

page_views.page_view_start as session_start,

customers.*

from page_views

left join customers

on page_views.customer_id = customer.id

with page_views as (

select * from (

-- filtered on configured event_time

select * from "analytics"."page_views"

where page_view_start >= '2024-10-01 00:00:00' -- Oct 1

and page_view_start < '2024-10-02 00:00:00'

)

),

customers as (

select * from "analytics"."customers"

),

...

with page_views as (

select * from (

-- filtered on configured event_time

select * from "analytics"."page_views"

where page_view_start >= '2024-10-02 00:00:00' -- Oct 2

and page_view_start < '2024-10-03 00:00:00'

)

),

customers as (

select * from "analytics"."customers"

),

...

dbt will instruct the data platform to take the result of each batch query and insert, update, or replace the contents of the analytics.sessions table for the same day of data. To perform this operation, dbt will use the most efficient atomic mechanism for "full batch" replacement that is available on each data platform.

It does not matter whether the table already contains data for that day. Given the same input data, the resulting table is the same no matter how many times a batch is reprocessed.

Each batch of sessions filters page_views to the matching time-bound batch, but doesn't filter sessions, performing a full scan for each batch.

Each batch of sessions filters page_views to the matching time-bound batch, but doesn't filter sessions, performing a full scan for each batch.Relevant configs

Several configurations are relevant to microbatch models, and some are required:

| Config | Description | Default | Type | Required |

|---|---|---|---|---|

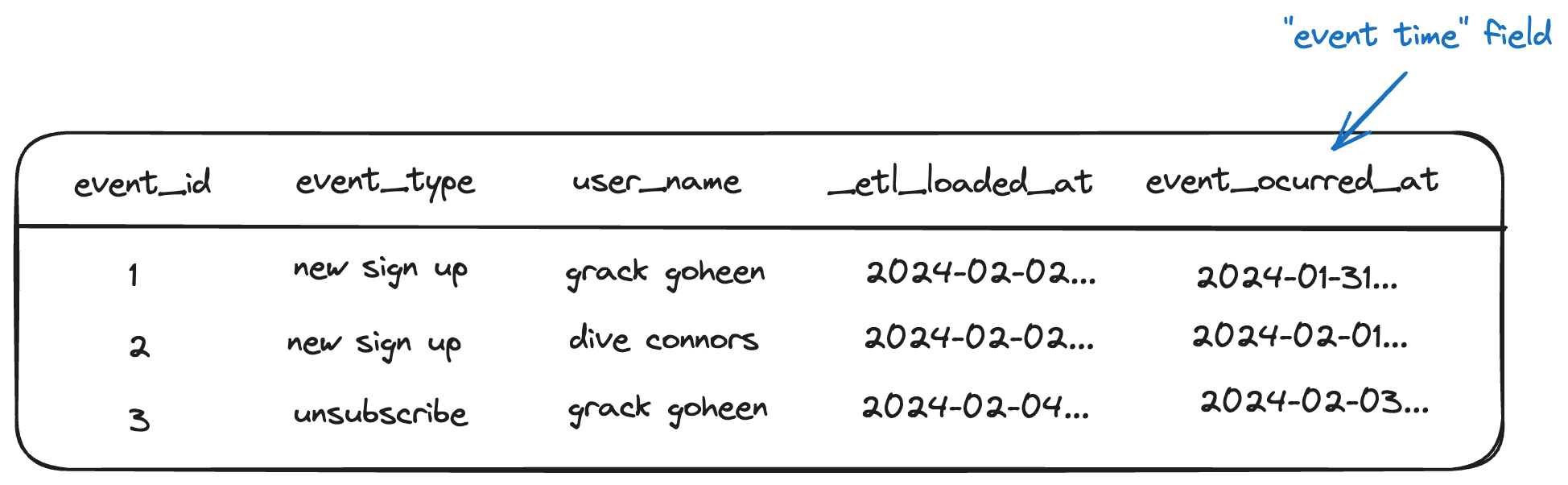

event_time | The column indicating "at what time did the row occur." Required for your microbatch model and any direct parents that should be filtered. | N/A | Column | Required |

begin | The "beginning of time" for the microbatch model. This is the starting point for any initial or full-refresh builds. For example, a daily-grain microbatch model run on 2024-10-01 with begin = '2023-10-01 will process 366 batches (it's a leap year!) plus the batch for "today." | N/A | Date | Required |

batch_size | The granularity of your batches. Supported values are hour, day, month, and year | N/A | String | Required |

lookback | Process X batches prior to the latest bookmark to capture late-arriving records. | 1 | Integer | Optional |

Required configs for specific adapters

Some adapters require additional configurations for the microbatch strategy. This is because each adapter implements the microbatch strategy differently.

The following table lists the required configurations for the specific adapters, in addition to the standard microbatch configs:

| Adapter | unique_key config | partition_by config |

|---|---|---|

dbt-postgres | ✅ Required | N/A |

dbt-spark | N/A | ✅ Required |

dbt-bigquery | N/A | ✅ Required |

For example, if you're using dbt-postgres, configure unique_key as follows:

{{ config(

materialized='incremental',

incremental_strategy='microbatch',

unique_key='sales_id', ## required for dbt-postgres

event_time='transaction_date',

begin='2023-01-01',

batch_size='day'

) }}

select

sales_id,

transaction_date,

customer_id,

product_id,

total_amount

from {{ source('sales', 'transactions') }}

In this example, unique_key is required because dbt-postgres microbatch uses the merge strategy, which needs a unique_key to identify which rows in the data warehouse need to get merged. Without a unique_key, dbt won't be able to match rows between the incoming batch and the existing table.

Full refresh

As a best practice, we recommend configuring full_refresh: False on microbatch models so that they ignore invocations with the --full-refresh flag. If you need to reprocess historical data, do so with a targeted backfill that specifies explicit start and end dates.

Usage�

You must write your model query to process (read and return) exactly one "batch" of data. This is a simplifying assumption and a powerful one:

- You don’t need to think about

is_incrementalfiltering - You don't need to pick among DML strategies (upserting/merging/replacing)

- You can preview your model, and see the exact records for a given batch that will appear when that batch is processed and written to the table

When you run a microbatch model, dbt will evaluate which batches need to be loaded, break them up into a SQL query per batch, and load each one independently.

dbt will automatically filter upstream inputs (source or ref) that define event_time, based on the lookback and batch_size configs for this model.

During standard incremental runs, dbt will process batches according to the current timestamp and the configured lookback, with one query per batch.

Note: If there’s an upstream model that configures event_time, but you don’t want the reference to it to be filtered, you can specify ref('upstream_model').render() to opt-out of auto-filtering. This isn't generally recommended — most models that configure event_time are fairly large, and if the reference is not filtered, each batch will perform a full scan of this input table.

Backfills

Whether to fix erroneous source data or retroactively apply a change in business logic, you may need to reprocess a large amount of historical data.

Backfilling a microbatch model is as simple as selecting it to run or build, and specifying a "start" and "end" for event_time. Note that --event-time-start and --event-time-end are mutually necessary, meaning that if you specify one, you must specify the other.

As always, dbt will process the batches between the start and end as independent queries.

dbt run --event-time-start "2024-09-01" --event-time-end "2024-09-04"

Retry

If one or more of your batches fail, you can use dbt retry to reprocess only the failed batches.

Timezones

For now, dbt assumes that all values supplied are in UTC:

event_timebegin--event-time-start--event-time-end

While we may consider adding support for custom time zones in the future, we also believe that defining these values in UTC makes everyone's lives easier.

How microbatch compares to other incremental strategies?

Most incremental models rely on the end user (you) to explicitly tell dbt what "new" means, in the context of each model, by writing a filter in an {% if is_incremental() %} conditional block. You are responsible for crafting this SQL in a way that queries {{ this }} to check when the most recent record was last loaded, with an optional look-back window for late-arriving records.

Other incremental strategies will control how the data is being added into the table — whether append-only insert, delete + insert, merge, insert overwrite, etc — but they all have this in common.

As an example:

{{

config(

materialized='incremental',

incremental_strategy='delete+insert',

unique_key='date_day'

)

}}

select * from {{ ref('stg_events') }}

{% if is_incremental() %}

-- this filter will only be applied on an incremental run

-- add a lookback window of 3 days to account for late-arriving records

where date_day >= (select {{ dbt.dateadd("day", -3, "max(date_day)") }} from {{ this }})

{% endif %}

For this incremental model:

- "New" records are those with a

date_daygreater than the maximumdate_daythat has previously been loaded - The lookback window is 3 days

- When there are new records for a given

date_day, the existing data fordate_dayis deleted and the new data is inserted

Let’s take our same example from before, and instead use the new microbatch incremental strategy:

{{

config(

materialized='incremental',

incremental_strategy='microbatch',

event_time='event_occured_at',

batch_size='day',

lookback=3,

begin='2020-01-01',

full_refresh=false

)

}}

select * from {{ ref('stg_events') }} -- this ref will be auto-filtered

Where you’ve also set an event_time for the model’s direct parents - in this case, stg_events:

models:

- name: stg_events

config:

event_time: my_time_field

And that’s it!

When you run the model, each batch templates a separate query. For example, if you were running the model on October 1, dbt would template separate queries for each day between September 28 and October 1, inclusive — four batches in total.

The query for 2024-10-01 would look like:

select * from (

select * from "analytics"."stg_events"

where my_time_field >= '2024-10-01 00:00:00'

and my_time_field < '2024-10-02 00:00:00'

)

Based on your data platform, dbt will choose the most efficient atomic mechanism to insert, update, or replace these four batches (2024-09-28, 2024-09-29, 2024-09-30, and 2024-10-01) in the existing table.